
Optimizations in a multicore pipeline

November 6, 2014

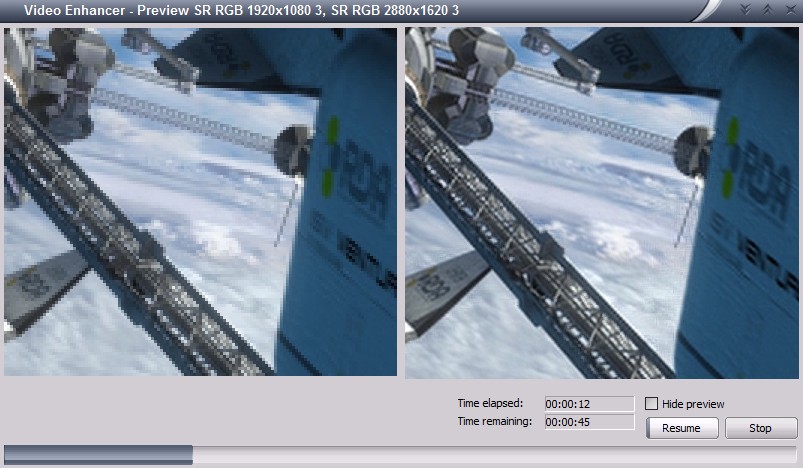

This is a story that happened during the development of Video Enhancer a few minor versions ago. It is a video processing application that, while doing its work, shows two images: "before" and "after", i.e. a part of the original video frame and the same part after processing.

It uses DirectShow and has a graph where vertices (called filters) are things like a file reader, an audio/video stream splitter, decoders, encoders, a muxer, a file writer and a number of processing filters, and the graph edges are data streams. What usually happens is: a reader reads the source video file, a splitter splits it into two streams (audio and video) and splits them into frames, a decoder turns compressed frames into raw bitmaps, a part of the bitmap is drawn on screen (the "before"), then processing filters turn them into a stream of different bitmaps (in this case our Super Resolution filter increases resolution, making each frame bigger), then a part of the processed frame is displayed on screen (the "after"), an encoder compresses the frame and sends it to the AVI writer, which collects frames from both the video and audio streams and writes them to an output AVI file.

Doing all of this sequentially is not very efficient, because nowadays we usually have multiple CPU cores and it would be better to use them all. To do that, special Parallelizer filters were added to the filter chain. Such a filter receives a frame, puts it into a queue and immediately returns. In another thread it takes frames from this queue and feeds them to the downstream filter. In effect, as soon as the decoder has decompressed a frame and given it to a parallelizer, it can immediately start decoding the next frame, and the just decoded frame will be processed in parallel. Similarly, as soon as a frame is processed, the processing filter can immediately start working on the next frame, and the just processed frame will be encoded and written in parallel, on another core. A pipeline!
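
The queue-and-worker idea can be sketched in portable C++, outside DirectShow. This is only an illustration of the technique, not the actual Parallelizer filter: the `Frame` type, the `Parallelizer` class and its `receive()`/`finish()` methods are hypothetical names, and the queue here is unbounded, whereas a real filter would presumably limit its length to keep memory use in check.

```cpp
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical frame type: a real one would carry the bitmap data.
struct Frame {
    int index;
};

// Sketch of a parallelizer stage: receive() enqueues and returns at once,
// a worker thread feeds the queued frames to the downstream stage in order.
class Parallelizer {
public:
    explicit Parallelizer(std::function<void(const Frame&)> downstream)
        : downstream_(std::move(downstream)),
          worker_([this] { run(); }) {}

    ~Parallelizer() { finish(); }

    // Called by the upstream stage; does not wait for downstream processing.
    void receive(Frame frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push(std::move(frame));
        }
        ready_.notify_one();
    }

    // Signals end of stream and waits until every queued frame is delivered.
    void finish() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            done_ = true;
        }
        ready_.notify_one();
        if (worker_.joinable()) worker_.join();
    }

private:
    void run() {
        for (;;) {
            Frame frame{};
            {
                std::unique_lock<std::mutex> lock(mutex_);
                ready_.wait(lock, [this] { return done_ || !queue_.empty(); });
                if (queue_.empty()) return;  // done_ is set and nothing is left
                frame = std::move(queue_.front());
                queue_.pop();
            }
            downstream_(frame);  // runs outside the lock, on the worker thread
        }
    }

    std::function<void(const Frame&)> downstream_;
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<Frame> queue_;
    bool done_ = false;
    std::thread worker_;  // declared last so the other members exist before it starts
};
```

Placing one such stage between the decoder and the processing filter, and another between the processing filter and the encoder, gives each of the three a thread of its own, which is the effect described above.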

At some point I noticed this pipeline didn't work as well on my dual-core laptop as on a quad-core desktop, so I decided to look closer at what happens when, and where any unnecessary delays may be. I added some logging to different parts of the pipeline and, since the logs weren't too clear in text form, made a converter into SVG, which produced some interesting pictures.

Read more...

tags: programming video_enhancer directshow

Working with raw video data in C# with DirectShow

October 19, 2013

Too many of the questions I see on forums and in emails revolve around one theme: how do I access the actual video data in a DirectShow app? People want to save images from their web cams, draw something on the video, analyse it, etc. But in DirectShow direct work with raw data is encapsulated and hidden inside filters, the building blocks out of which we build DirectShow graphs. So when dealing with DirectShow we've got a bunch of filters on our hands and we tell them what to do, but we don't get the actual raw bytes of audio/video data. Hence the many questions, because there isn't always a suitable filter that does exactly what you need. In some cases we're forced to create our own filter, but that may be rather complicated and tedious. However, many cases can be solved by using one standard filter which is part of DirectShow itself: Sample Grabber.

Here's an example of using it to implement a video effect and apply it to a stream of video from a web camera.

This short tutorial will basically repeat my previous post but this time we'll use C# instead of C++.

Read more...

tags: directshow

Accessing raw video data in DirectShow

October 18, 2013

In DirectShow one makes multimedia apps by building graphs where nodes (called filters) process the data (capture or read, convert, compress, write etc.) and the graph's edges are streams of multimedia samples (video frames, chunks of audio, etc.). All the work with raw data takes place inside the filters, and the host application usually doesn't deal with video/audio data directly; it only arranges the filters in a graph, telling them what to do. This is fine for typical tasks when you've got all the necessary filters, but sometimes there is no ready-made filter for your task, and then you need to create your own filter, which may be rather tedious and complicated. However, there are many cases when you only want to peek at the data, analyse it or save some part of it. You don't need a transform that changes the media type, so making your own filter is overkill. There is a nice standard filter in DirectShow which can give you access to raw video data (let's stick with just video for now) without creating your own filters. It's called Sample Grabber.

MSDN says it's deprecated, but this filter is still available in all versions of Windows including Windows 8. Even if it goes away some time in the future, recreating it will be very easy, so your program will not need to change.

In this little tutorial I'll show how to make a small DirectShow app in C++ which takes a video stream from a USB camera, applies a simple video effect (accessing raw video data) and shows it on screen. The idea is simple: make a graph where the video stream flows from the camera through the sample grabber to a video renderer. Each time a frame passes the sample grabber it calls my callback, where I manipulate the raw video data before it's sent down the stream for displaying.
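
To give a feel for what "manipulating raw video data" means, here is a minimal sketch of one possible effect, kept free of DirectShow so it stands on its own. It assumes the frame is uncompressed with 8 bits per channel (for example RGB24) and that the callback hands you a pointer to the frame bytes and their length; `invertFrame` is a hypothetical name, not something from DirectShow or from the full tutorial.

```cpp
#include <cstddef>
#include <cstdint>

// Inverts every channel value of an uncompressed frame in place,
// turning the picture into its negative.
// Assumption: 8 bits per channel, e.g. an RGB24 bitmap.
void invertFrame(std::uint8_t* buffer, std::size_t length) {
    for (std::size_t i = 0; i < length; ++i) {
        buffer[i] = static_cast<std::uint8_t>(255 - buffer[i]);
    }
}
```

Because the function changes the bytes in place, whatever is downstream of the grabber would receive the modified frame.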

Read more...

tags: directshow

Writing AVI files with x264

September 26, 2012

x264 is an excellent video codec implementing the H.264 video compression standard. One source of its ability to greatly compress video is that a compressed frame may refer to several other frames, not only in the past but also in the future, i.e. frames following the current one. Such frames are called B-frames (bidirectional), and even the previous generation of video codecs, MPEG4 ASP (like XviD), had this feature, so it's not particularly new. However, the AVI file format and the Video-for-Windows (VfW) subsystem predate those and are designed to work strictly sequentially: frames are compressed and decompressed in strict order, one by one, so a frame can only refer to some previous frames. Such frames are called P-frames (predicted). For this reason the AVI format does not really suit MPEG4 and H.264 video. However, with some tricks (or should I say hacks) it is still possible to write an MPEG4 or H.264 stream to an AVI file, and this is what VfW codecs like XviD and x264vfw do. They buffer some frames and then output compressed frames with some delay, sometimes combining P and B frames in single chunks. An appropriate decoder knows this trick and restores the proper frame order, so everything works fine.
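
Why B-frames force buffering and delay can be shown with a small sketch. A B-frame needs its future reference frame to exist first, so the order in which frames are coded differs from the order in which they are displayed. The function below is an illustration under simple assumptions (each frame is described only by its type letter, and a run of B-frames depends on the next I or P frame); `toCodingOrder` is a hypothetical name and this is not how x264 or XviD actually schedule frames.

```cpp
#include <string>

// Reorders a sequence of frame types from display order to coding order.
// B-frames are held back until the I or P frame they refer to has been emitted.
std::string toCodingOrder(const std::string& displayOrder) {
    std::string coded;
    std::string pendingB;  // B-frames waiting for their future reference
    for (char type : displayOrder) {
        if (type == 'B') {
            pendingB += type;
        } else {
            coded += type;      // I or P: the reference frame goes out first
            coded += pendingB;  // then the B-frames that depend on it
            pendingB.clear();
        }
    }
    coded += pendingB;  // trailing B-frames are simply flushed in this sketch
    return coded;
}
```

For the display order `IBBPBBP` this yields `IPBBPBB`: the encoder cannot output the first B-frame until it has seen the P-frame three positions later, which is the kind of delay a strictly sequential container was not designed for.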

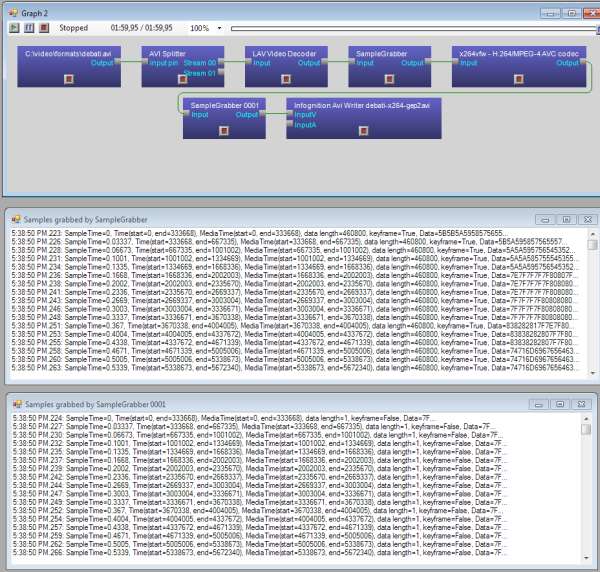

Some time ago we received a complaint from a user of Video Enhancer about x264vfw: video compressed with this codec to an AVI file showed a black screen when it started playing, and only after jumping forward a few seconds did it start to play, but with the sound out of sync. To investigate the issue I made a graph in GraphEditPlus where some video was compressed with x264vfw and then written to an AVI file. But I also inserted a Sample Grabber right after the compressor to see what it outputs. Here's what I got:


It turned out that for some reason x264vfw spat out ~50 empty frames, 1 byte in size each, all marked as delta (P) frames. After those ~50 frames came the first key frame, followed by normal P frames of sane sizes. Those ~50 empty frames, not preceded by a key frame (which is what an AVI file is expected to start with), caused the symptoms: a black screen at the start of playback and the audio sync issue. I don't know in what version of x264vfw this behavior started, but it's really problematic for writing AVI files.

Fortunately, the solution is very simple. If you open the configuration dialog of x264vfw you can find a "Zero Latency" check box. Check it, as well as "VirtualDub hack", and then it will work properly: it will start sending sane data from the first frame, and the resulting AVI file will play fine.

tags: video_enhancer directshow

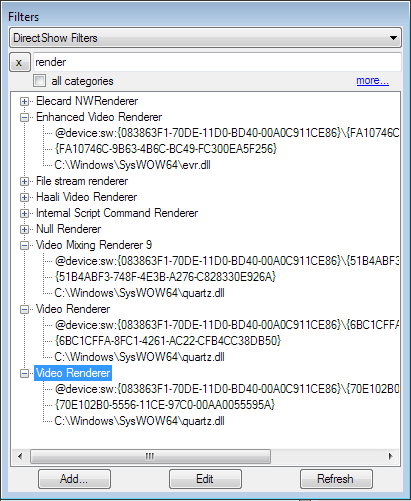

Working with old Video Renderer

August 15, 2012

There are several different video renderers available in DirectShow. When you look at the list of DirectShow filters you can see two filters named "Video Renderer" among others. One of them, with a GUID starting with "6BC1..", is VMR-7 (video mixing renderer); it is the default video renderer on Windows XP and later. The other, with a GUID starting with "70E1..", is the old Video Renderer used by default on earlier versions of Windows. This filter may bring some surprises even today.

Recently someone reported a crash in VDFilter, our DirectShow wrapper for VirtualDub filters. He sent us a .grf file, a saved graph which forced the crash when run. In that graph our filter was connected directly to the old Video Renderer. After building a similar graph I could reproduce the case; indeed, something went wrong there. The first minutes of debugging showed that the memory buffer of a media sample provided by the video renderer to the upstream filter was smaller than the size of the data our filter tried to write there. How could this happen? Usually, when two filters agree on a connection, at some point the downstream filter (which will receive data) calls the upstream filter's DecideBufferSize() method to ask how big the data samples will be. It uses this value to create buffers for the samples and provides the buffers to the upstream filter to fill with data. Video Renderer does that. However, if during playback its window doesn't fit into the screen or gets resized, Video Renderer tries to renegotiate the connection type and offers a media type with different video dimensions, according to its window size. If the upstream filter accepts such a media type, Video Renderer starts to provide buffers of the changed size without even calling DecideBufferSize(). Our filter wasn't ready for this sneaky behaviour: it continued to provide the amount of data specified in the last call to DecideBufferSize(), which overflowed the new, smaller buffers provided by Video Renderer. We had to change our filter to refuse connection type changes while running (otherwise it would have to include a resizer to rescale output images to the new dimensions given by Video Renderer).

Moral of this story: when you create a DirectShow transform filter, don't expect output samples to be the same size you requested in DecideBufferSize(), and be ready to be asked for a connection type change during playback!
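
The defensive half of that moral can be sketched without DirectShow. The `OutputSample` struct and `writeFrame` function below are hypothetical stand-ins; in a real filter the capacity would come from the media sample itself (`IMediaSample::GetSize()`) rather than from the value negotiated earlier. This is a sketch of the check, not VDFilter's actual code.

```cpp
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical stand-in for an output media sample.
struct OutputSample {
    std::vector<unsigned char> buffer;  // memory provided by the downstream filter
    std::size_t actualLength = 0;       // number of bytes actually written
};

// Copies a frame into the sample only if it fits.
// Returns false instead of overflowing when the buffer is smaller than expected.
bool writeFrame(OutputSample& sample, const unsigned char* data, std::size_t size) {
    if (size > sample.buffer.size()) {
        return false;  // the buffer shrank since the size was negotiated
    }
    if (size > 0) {
        std::memcpy(sample.buffer.data(), data, size);
    }
    sample.actualLength = size;
    return true;
}
```

Checking the size of the buffer actually received, on every sample, turns a silent memory overrun into an error the filter can handle.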

tags: directshow

Deciphering .grf files

August 10, 2012

In DirectShow we work with graphs of filters. We build them in tools like GraphEdit or GraphEditPlus while experimenting, and then we build them in our own code. Some parts of a graph can be built automatically by DirectShow's intelligent connect procedure, which selects filters according to their priorities and their ability to handle the given media types. To see the details of a graph built by our code we can save the graph to a file and then open it in an editor. Loading a graph from a file is done by calling the IPersistStream::Load() method, which performs all the loading logic and either succeeds or fails; there's not much control over its actions. If the graph was created on a different machine, or on the same machine but in different circumstances, and it mentions some filters, devices or even files not available at the moment of loading, then loading fails and the graph file is pretty much worthless.

Not anymore! Here is a small utility which can read a .grf file and translate it to plain text containing most of the useful information. Now you can easily see the graph details (filters, connections, media types, including all basic info for video and audio streams) even if you don't have all the mentioned filters and files.

grfdump.zip (134 KB)

It's a command-line tool; you run it like

grfdump.exe file.grf > plain_text.txt

and get something like:

Read more...

tags: directshow

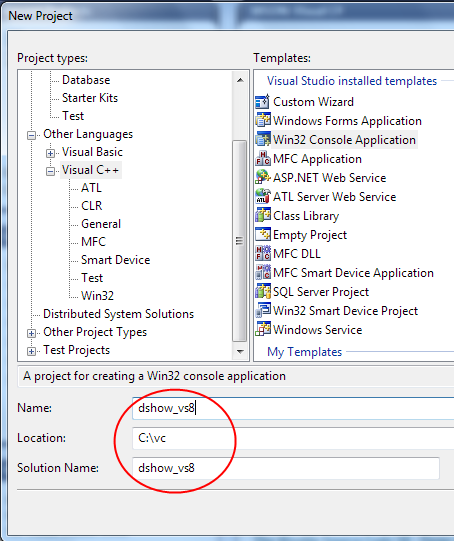

Working with DirectShow in VS2005

May 26, 2010

We regularly receive questions about how to build the C++ source code generated by GraphEditPlus. It generates some code, but in order to turn it into an executable one needs to create and properly set up a Visual Studio project.

Here's a short tutorial on how to start working with DirectShow in C++ using Microsoft Visual Studio 2005. It also applies to VS2008 and probably VS2010.

To work with DirectShow, first of all you need the DirectShow headers and libs. Currently they are included in the Windows SDK, which can be downloaded from the Microsoft website. If you don't have it installed yet, go install it now and then come back.

By default it is installed into C:\Program Files\Microsoft SDKs\Windows\v7.0. If you install it to some other place, make appropriate changes to the paths below.

Now, run Visual Studio and create a C++ project. In this tutorial I'll pick a console application, for simplicity. Give it a name and choose a path:

Read more...

tags: directshow

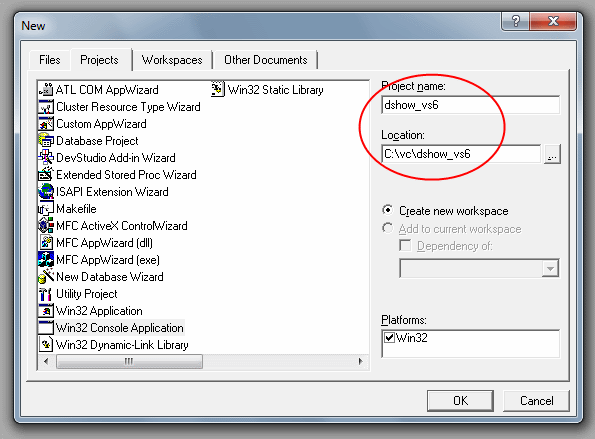

Working with DirectShow in VS6

May 25, 2010

Although Visual Studio 6 was made in the previous century, it is still widely used by C++ developers around the world. Probably because of its good support of MFC (which was screwed up in later versions), or because later versions weren't much better as an IDE for writing C++, or because they introduced unwanted difficulties with runtime libraries. Whatever the real reason for using a now 12-year-old IDE is, we still receive questions on how to use code generated by GraphEditPlus in VS6.

To work with DirectShow, first of all you need the DirectShow headers and libs. Currently they are included in the Windows SDK. A few years ago they were part of the DirectX SDK. When we're working with DirectShow in VS6 we still use the old DirectX 9 SDK, which we have installed in the C:\DXSDK folder. If you have the headers and libs installed in another place, change your paths appropriately.

So, let's create the simplest DirectShow app. Make a new project, let it be a Win32 Console Application. Give it a name and choose a path:

Read more...

tags: directshow
